On 29 May 2025, the MIIT-Shanghai Humanoid Robot Innovation Center国家地方共建人形机器人创新中心, together with the School of Information Science and Technology at Fudan University, officially launched MindLoongGPT, the world’s first generative motion foundation model for humanoid robots.

MindLoongGPT offers multimodal interaction with a low entry barrier. It accepts text, voice, and video input, then the system generates smooth, corresponding actions. This eliminates the need for complex parameter tuning, making advanced robot control accessible to non-experts.

The model ensures continuity in motion sequences, preventing stiffness or abrupt transitions. Actions like running or dancing are not only smooth but also retain the natural rhythm of the human body.

It employs a staged generation strategy to achieve millimetre-level accuracy in both global posture and local joint movements. Whether it’s finger movements or full-body leaps, the output rivals human-level detail.

The model is only one-third the size of comparable models and can run in real time on educational robots and smart wearables, making it ideal for real-world applications. MindLoongGPT also allows users to adjust style, rhythm, and speed.

The model ensures fast adaptation between the human motion model and various robot hardware (URDF structures). It is compatible with mainstream robot operating systems like ROS and V-REP, allowing for direct import of generated motions and boosting deployment efficiency by 80%.

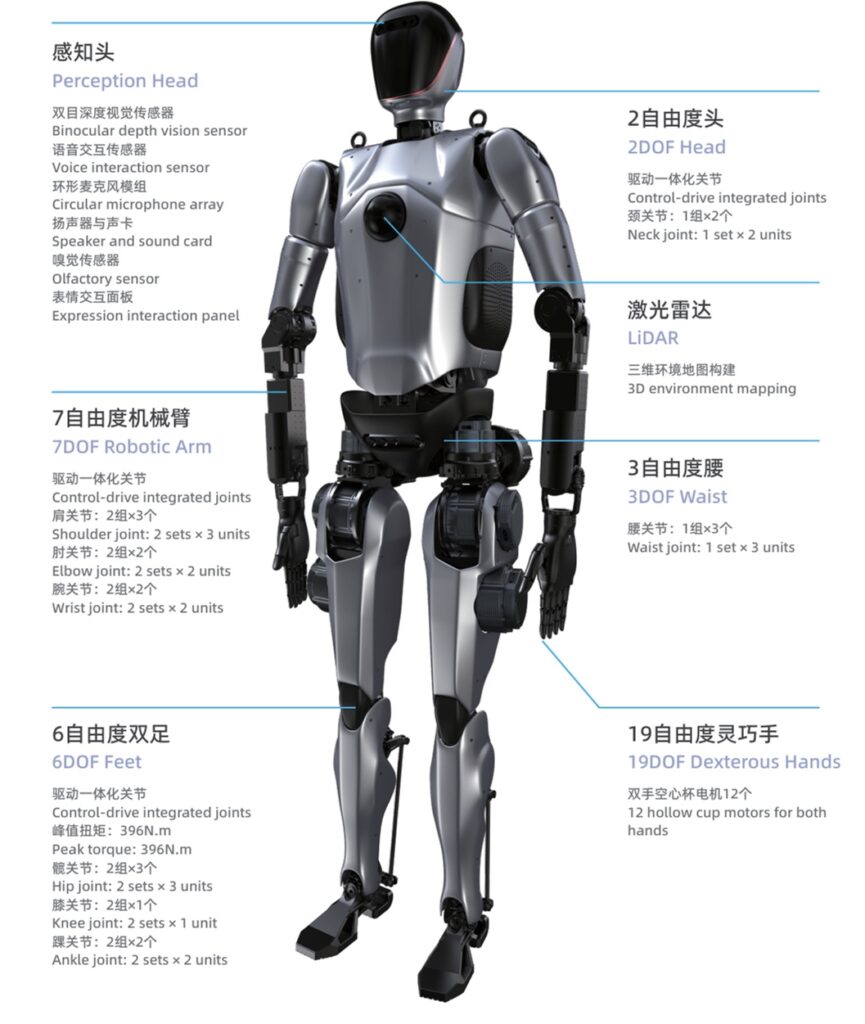

MindLoongGPT (7B parameters) supports multiple tasks including text-to-motion, motion-to-text, and motion-to-motion generation. The large model generates complex motion trajectories that can run on the AzureLoong (青龙)robot. A humanoid robot innovation base in Zhangjiang Science City will offer technical support, shared data, and compute power.

AzureLoong (青龙)robot (https://www.jfdaily.com/sgh/detail?id=1538884)